Android includes support for high performance 2D and 3D graphics with the Open Graphics Library (OpenGL), specifically, the OpenGL ES API. OpenGL is a cross-platform graphics API that specifies a standard software interface for 3D graphics processing hardware. OpenGL ES is a flavor of the OpenGL specification intended for embedded devices. The OpenGL ES 1.0 and 1.1 API specifications have been supported since Android 1.0. Beginning with Android 2.2 (API Level 8), the framework supports the OpenGL ES 2.0 API specification.

Note: The specific API provided by the Android framework is similar to the J2ME JSR239 OpenGL ES API, but is not identical. If you are familiar with the J2ME JSR239 specification, be alert for variations.

Android supports OpenGL both through its framework API and the Native Development Kit (NDK). This topic focuses on the Android framework interfaces. For more information about the NDK, see the Android NDK.

There are two foundational classes in the Android framework that let you create and manipulate graphics with the OpenGL ES API: GLSurfaceView and GLSurfaceView.Renderer. If your goal is to use OpenGL in your Android application, understanding how to implement these classes in an activity should be your first objective.

GLSurfaceView: This class is a View where you can draw and manipulate objects using OpenGL API calls and is similar in function to a SurfaceView. You can use this class by creating an instance of GLSurfaceView and adding your Renderer to it. However, if you want to capture touch screen events, you should extend the GLSurfaceView class to implement the touch listeners, as shown in the OpenGL tutorials for ES 1.0 and ES 2.0 and in the TouchRotateActivity sample.

GLSurfaceView.Renderer: This interface defines the methods required for drawing graphics in a GLSurfaceView. You must provide an implementation of this interface as a separate class and attach it to your GLSurfaceView instance using GLSurfaceView.setRenderer().

The GLSurfaceView.Renderer interface requires that you implement the following methods:

onSurfaceCreated(): The system calls this method once, when creating the GLSurfaceView. Use this method to perform actions that need to happen only once, such as setting OpenGL environment parameters or initializing OpenGL graphic objects.

onDrawFrame(): The system calls this method on each redraw of the GLSurfaceView. Use this method as the primary execution point for drawing (and re-drawing) graphic objects.

onSurfaceChanged(): The system calls this method when the GLSurfaceView geometry changes, including changes in size of the GLSurfaceView or orientation of the device screen. For example, the system calls this method when the device changes from portrait to landscape orientation. Use this method to respond to changes in the GLSurfaceView container.

Once you have established a container view for OpenGL using GLSurfaceView and GLSurfaceView.Renderer, you can begin calling OpenGL APIs using the following packages and classes:

android.opengl - This package provides a static interface to the OpenGL ES 1.0/1.1 classes and better performance than the javax.microedition.khronos package interfaces.

javax.microedition.khronos.opengles - This package provides the standard implementation of OpenGL ES 1.0/1.1.

android.opengl.GLES20 - This class provides the interface to OpenGL ES 2.0 and is available starting with Android 2.2 (API Level 8).

If you'd like to start building an app with OpenGL right away, have a look at the tutorials for OpenGL ES 1.0 or OpenGL ES 2.0.

If your application uses OpenGL features that are not available on all devices, you must include these requirements in your AndroidManifest.xml file. Here are the most common OpenGL manifest declarations:

    <!-- Tell the system this app requires OpenGL ES 2.0. -->
    <uses-feature android:glEsVersion="0x00020000" android:required="true" />

Adding this declaration causes the Android Market to restrict your application from being installed on devices that do not support OpenGL ES 2.0.

Texture compression requirements: If your application uses texture compression formats, you must declare the formats your application supports in your manifest file using <supports-gl-texture>. For more information about available texture compression formats, see Texture compression support.

Declaring texture compression requirements in your manifest hides your application from users with devices that do not support at least one of your declared compression types. For more information on how Android Market filtering works for texture compressions, see the Android Market and texture compression filtering section of the <supports-gl-texture> documentation.
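The android:glEsVersion value in the version declaration above packs the OpenGL ES version into one integer: the upper 16 bits hold the major version and the lower 16 bits hold the minor version. The following plain-Java sketch shows that encoding. The GlEsVersion class and its method names are hypothetical helpers invented for this illustration and are not part of the Android framework.

```java
// Hypothetical helper for illustration only; not an Android framework class.
final class GlEsVersion {
    private GlEsVersion() {}

    /** Major version: the upper 16 bits of the packed value. */
    static int major(int packed) {
        return (packed >> 16) & 0xFFFF;
    }

    /** Minor version: the lower 16 bits of the packed value. */
    static int minor(int packed) {
        return packed & 0xFFFF;
    }

    /** Packs a major/minor pair into the hexadecimal form used in the manifest. */
    static String toManifestValue(int major, int minor) {
        return String.format("0x%08x", (major << 16) | minor);
    }

    public static void main(String[] args) {
        int required = 0x00020000; // value from the manifest declaration
        System.out.println(major(required) + "." + minor(required)); // prints "2.0"
        System.out.println(toManifestValue(1, 1));                   // prints "0x00010001"
    }
}
```

Reading the value this way makes it easier to check that a manifest declares the version you intend, for example 0x00010001 for OpenGL ES 1.1.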

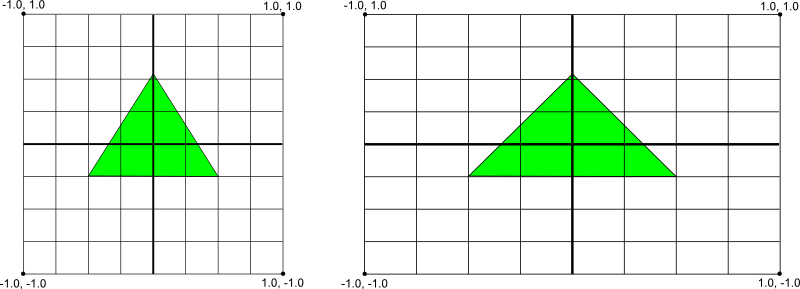

One of the basic problems in displaying graphics on Android devices is that their screens can vary in size and shape. OpenGL assumes a square, uniform coordinate system and, by default, happily draws those coordinates onto your typically non-square screen as if it is perfectly square.

Figure 1. Default OpenGL coordinate system (left) mapped to a typical Android device screen (right).

The illustration above shows the uniform coordinate system assumed for an OpenGL frame on the left, and how these coordinates actually map to a typical device screen in landscape orientation on the right. To solve this problem, you can apply OpenGL projection modes and camera views to transform coordinates so your graphic objects have the correct proportions on any display.

In order to apply projection and camera views, you create a projection matrix and a camera view matrix and apply them to the OpenGL rendering pipeline. The projection matrix recalculates the coordinates of your graphics so that they map correctly to Android device screens. The camera view matrix creates a transformation that renders objects from a specific eye position.

In the ES 1.0 API, you apply projection and camera view by creating each matrix and then adding them to the OpenGL environment.

The following example modifies the onSurfaceChanged() method of a GLSurfaceView.Renderer implementation to create a projection matrix based on the screen's aspect ratio and apply it to the OpenGL rendering environment.

    public void onSurfaceChanged(GL10 gl, int width, int height) {
        gl.glViewport(0, 0, width, height);

        // make adjustments for screen ratio
        float ratio = (float) width / height;
        gl.glMatrixMode(GL10.GL_PROJECTION);        // set matrix to projection mode
        gl.glLoadIdentity();                        // reset the matrix to its default state
        gl.glFrustumf(-ratio, ratio, -1, 1, 3, 7);  // apply the projection matrix
    }
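To make the effect of the glFrustumf(-ratio, ratio, -1, 1, 3, 7) call above more concrete, the following plain-Java sketch builds the standard OpenGL perspective frustum matrix for the same arguments and projects a point through it. The FrustumSketch class, its method names, and the 800x480 surface size are assumptions made for illustration. They are not part of the Android API, and on a device the GL implementation performs this calculation for you.

```java
// Illustrative sketch only; class and method names are hypothetical.
final class FrustumSketch {
    private FrustumSketch() {}

    /** Builds a column-major 4x4 perspective matrix as defined for glFrustum. */
    static float[] frustum(float left, float right, float bottom, float top,
                           float near, float far) {
        float[] m = new float[16]; // all other entries stay 0
        m[0] = 2f * near / (right - left);
        m[5] = 2f * near / (top - bottom);
        m[8] = (right + left) / (right - left);
        m[9] = (top + bottom) / (top - bottom);
        m[10] = -(far + near) / (far - near);
        m[11] = -1f;
        m[14] = -2f * far * near / (far - near);
        return m;
    }

    /** Transforms (x, y, z, 1) and applies the perspective divide. */
    static float[] project(float[] m, float x, float y, float z) {
        float cx = m[0] * x + m[4] * y + m[8] * z + m[12];
        float cy = m[1] * x + m[5] * y + m[9] * z + m[13];
        float cz = m[2] * x + m[6] * y + m[10] * z + m[14];
        float cw = m[3] * x + m[7] * y + m[11] * z + m[15];
        return new float[] {cx / cw, cy / cw, cz / cw};
    }

    public static void main(String[] args) {
        float ratio = 800f / 480f; // assumed landscape surface size
        float[] m = frustum(-ratio, ratio, -1f, 1f, 3f, 7f);
        // A point one unit right and one unit up, on the near plane.
        float[] ndc = project(m, 1f, 1f, -3f);
        System.out.println("x=" + ndc[0] + " y=" + ndc[1] + " z=" + ndc[2]);
    }
}
```

With these arguments, a point at x = ratio on the near plane lands on the right edge of the normalized coordinate range, while a point at x = 1 lands at 1/ratio. After the viewport stretches the normalized range across the wider screen, one unit in x and one unit in y cover the same number of pixels, which is the correction the projection is meant to provide.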

The following example modifies the onDrawFrame() method of a GLSurfaceView.Renderer implementation to apply a model view and use the GLU.gluLookAt() utility to create a viewing transformation that simulates a camera position.

    public void onDrawFrame(GL10 gl) {
        ...
        // Set GL_MODELVIEW transformation mode
        gl.glMatrixMode(GL10.GL_MODELVIEW);
        gl.glLoadIdentity();  // reset the matrix to its default state

        // When using GL_MODELVIEW, you must set the camera view
        GLU.gluLookAt(gl, 0, 0, -5, 0f, 0f, 0f, 0f, 1.0f, 0.0f);
        ...
    }

For a complete example of how to apply projection and camera views with OpenGL ES 1.0, see the OpenGL ES 1.0 tutorial.

In the ES 2.0 API, you apply projection and camera view by first adding a matrix member to the vertex shaders of your graphics objects. With this matrix member added, you can then generate and apply projection and camera viewing matrices to your objects.

In the following vertex shader code, the uMVPMatrix member allows you to apply projection and camera viewing matrices to the coordinates of objects that use this shader.

    private final String vertexShaderCode =

        // This matrix member variable provides a hook to manipulate
        // the coordinates of objects that use this vertex shader
        "uniform mat4 uMVPMatrix;   \n" +

        "attribute vec4 vPosition;  \n" +
        "void main(){               \n" +

        // the matrix must be included as part of gl_Position
        " gl_Position = uMVPMatrix * vPosition; \n" +

        "}  \n";

Note: The example above defines a single transformation matrix member in the vertex shader into which you apply a combined projection matrix and camera view matrix. Depending on your application requirements, you may want to define separate projection matrix and camera viewing matrix members in your vertex shaders so you can change them independently.

The following example modifies the onSurfaceCreated() method of a GLSurfaceView.Renderer implementation to access the matrix variable defined in the vertex shader above.

    public void onSurfaceCreated(GL10 unused, EGLConfig config) {
        ...
        muMVPMatrixHandle = GLES20.glGetUniformLocation(mProgram, "uMVPMatrix");
        ...
    }

The following example modifies the onSurfaceCreated() and onSurfaceChanged() methods of a GLSurfaceView.Renderer implementation to create a camera view matrix and a projection matrix based on the screen aspect ratio of the device.

    public void onSurfaceCreated(GL10 unused, EGLConfig config) {
        ...
        // Create a camera view matrix
        Matrix.setLookAtM(mVMatrix, 0, 0, 0, -3, 0f, 0f, 0f, 0f, 1.0f, 0.0f);
    }

    public void onSurfaceChanged(GL10 unused, int width, int height) {
        GLES20.glViewport(0, 0, width, height);

        float ratio = (float) width / height;

        // create a projection matrix from device screen geometry
        Matrix.frustumM(mProjMatrix, 0, -ratio, ratio, -1, 1, 3, 7);
    }

The following example modifies the onDrawFrame() method of a GLSurfaceView.Renderer implementation to combine the projection matrix and camera view created in the code above and then apply the result to the graphic objects to be rendered by OpenGL.

    public void onDrawFrame(GL10 unused) {
        ...
        // Combine the projection and camera view matrices
        Matrix.multiplyMM(mMVPMatrix, 0, mProjMatrix, 0, mVMatrix, 0);

        // Apply the combined projection and camera view transformations
        GLES20.glUniformMatrix4fv(muMVPMatrixHandle, 1, false, mMVPMatrix, 0);

        // Draw objects
        ...
    }
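The order of the arguments to Matrix.multiplyMM() in the code above matters: the method computes result = lhs x rhs, and because OpenGL treats vertices as column vectors, the right-hand matrix is the one applied to a vertex first. The following plain-Java sketch reproduces that behavior with two simple matrices so you can see the difference. The MatrixOrderSketch class and its helper methods are hypothetical and exist only for this illustration, and a scale and a translation stand in for the projection and camera view matrices.

```java
// Illustrative sketch only; class and method names are hypothetical.
final class MatrixOrderSketch {
    private MatrixOrderSketch() {}

    /** Returns lhs x rhs for two 4x4 column-major matrices. */
    static float[] multiply(float[] lhs, float[] rhs) {
        float[] out = new float[16];
        for (int col = 0; col < 4; col++) {
            for (int row = 0; row < 4; row++) {
                float sum = 0f;
                for (int k = 0; k < 4; k++) {
                    sum += lhs[k * 4 + row] * rhs[col * 4 + k];
                }
                out[col * 4 + row] = sum;
            }
        }
        return out;
    }

    /** Returns m x v for a column-major matrix and a 4-component column vector. */
    static float[] transform(float[] m, float[] v) {
        float[] out = new float[4];
        for (int row = 0; row < 4; row++) {
            for (int k = 0; k < 4; k++) {
                out[row] += m[k * 4 + row] * v[k];
            }
        }
        return out;
    }

    /** Translation matrix: the offset lives in the fourth column. */
    static float[] translation(float tx, float ty, float tz) {
        float[] m = new float[16];
        m[0] = m[5] = m[10] = m[15] = 1f;
        m[12] = tx;
        m[13] = ty;
        m[14] = tz;
        return m;
    }

    /** Uniform scale matrix. */
    static float[] scale(float s) {
        float[] m = new float[16];
        m[0] = m[5] = m[10] = s;
        m[15] = 1f;
        return m;
    }

    public static void main(String[] args) {
        float[] vertex = {1f, 0f, 0f, 1f};
        float[] s = scale(3f);
        float[] t = translation(2f, 0f, 0f);
        // scale x translation: translate first (x = 3), then scale (x = 9).
        System.out.println(transform(multiply(s, t), vertex)[0]);
        // translation x scale: scale first (x = 3), then translate (x = 5).
        System.out.println(transform(multiply(t, s), vertex)[0]);
    }
}
```

In the renderer code, passing mProjMatrix as the left-hand matrix and mVMatrix as the right-hand matrix therefore applies the camera view to each vertex before the projection.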

For a complete example of how to apply projection and camera view with OpenGL ES 2.0, see the OpenGL ES 2.0 tutorial.

The OpenGL ES 1.0 and 1.1 API specifications have been supported since Android 1.0. Beginning with Android 2.2 (API Level 8), the framework supports the OpenGL ES 2.0 API specification. OpenGL ES 2.0 is supported by most Android devices and is recommended for new applications being developed with OpenGL. For information about the relative number of Android-powered devices that support a given version of OpenGL ES, see the OpenGL ES Versions Dashboard.

Texture compression can significantly increase the performance of your OpenGL application by reducing memory requirements and making more efficient use of memory bandwidth. The Android framework provides support for the ETC1 compression format as a standard feature, including an ETC1Util utility class and the etc1tool compression tool (located in the Android SDK at <sdk>/tools/). For an example of an Android application that uses texture compression, see the CompressedTextureActivity code sample.
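As a rough illustration of the memory savings, the following plain-Java sketch compares the size of an uncompressed 24-bit RGB texture with the size of the same texture encoded as ETC1, which stores each 4x4 block of pixels in 8 bytes. The Etc1SizeSketch class and its method names are hypothetical and are not part of the framework. The sketch only reproduces the arithmetic, and it ignores any file header and mipmap levels.

```java
// Illustrative sketch only; class and method names are hypothetical.
final class Etc1SizeSketch {
    private Etc1SizeSketch() {}

    /** Size in bytes of an uncompressed texture with 3 bytes (RGB888) per pixel. */
    static int rgb888Size(int width, int height) {
        return width * height * 3;
    }

    /**
     * Size in bytes of ETC1 pixel data. Dimensions are rounded up to a
     * multiple of 4 because ETC1 encodes whole 4x4 blocks, and each block
     * takes 8 bytes, which works out to half a byte per pixel.
     */
    static int etc1Size(int width, int height) {
        int paddedWidth = (width + 3) & ~3;
        int paddedHeight = (height + 3) & ~3;
        return (paddedWidth * paddedHeight) / 2;
    }

    public static void main(String[] args) {
        int width = 256;
        int height = 256;
        System.out.println("RGB888: " + rgb888Size(width, height) + " bytes"); // 196608
        System.out.println("ETC1:   " + etc1Size(width, height) + " bytes");   // 32768
    }
}
```

For a 256x256 texture this is a sixfold reduction compared with 24-bit RGB, which is where the savings in memory and bandwidth come from.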

To check if the ETC1 format is supported on a device, call the ETC1Util.isETC1Supported() method.

Note: The ETC1 texture compression format does not support textures with an alpha channel. If your application requires textures with an alpha channel, you should investigate other texture compression formats available on your target devices.

Beyond the ETC1 format, Android devices have varied support for texture compression based on their GPU chipsets and OpenGL implementations. You should investigate texture compression support on the devices you are targeting to determine what compression types your application should support. To determine what texture formats are supported on a given device, you must query the device and review the OpenGL extension names, which identify what texture compression formats (and other OpenGL features) are supported by the device. Some commonly supported texture compression formats are as follows:

GL_AMD_compressed_ATC_texture
GL_ATI_texture_compression_atitc
GL_IMG_texture_compression_pvrtc
GL_OES_texture_compression_S3TC
GL_EXT_texture_compression_s3tc
GL_EXT_texture_compression_dxt1
GL_EXT_texture_compression_dxt3
GL_EXT_texture_compression_dxt5
GL_AMD_compressed_3DC_texture

Warning: These texture compression formats are not supported on all devices. Support for these formats can vary by manufacturer and device. For information on how to determine what texture compression formats are on a particular device, see the next section.

Note: Once you decide which texture compression formats your application will support, make sure you declare them in your manifest using <supports-gl-texture>. Using this declaration enables filtering by external services such as Android Market, so that your app is installed only on devices that support the formats your app requires. For details, see OpenGL manifest declarations.

Implementations of OpenGL vary by Android device in terms of the extensions to the OpenGL ES API that are supported. These extensions include texture compressions, but typically also include other extensions to the OpenGL feature set.

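To determine what texture compression formats, and other OpenGL extensions, are supported on a particular device, run the following code on your target devices from within a GLSurfaceView.Renderer callback, where gl is the GL10 instance passed to that callback (glGetString() is an instance method, so it cannot be called on the GL10 interface itself):

    String extensions = gl.glGetString(GL10.GL_EXTENSIONS);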

Warning: The results of this call vary by device! You must run this call on several target devices to determine what compression types are commonly supported.
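The extensions string is a single space-separated list of names, so it helps to split it before checking for a format. The following plain-Java sketch shows one way to do that. The GlExtensions class and its method names are hypothetical helpers, and the sample string in main() is made up for illustration rather than captured from a real device.

```java
import java.util.HashSet;
import java.util.Set;

// Illustrative sketch only; class and method names are hypothetical.
final class GlExtensions {
    private GlExtensions() {}

    /** Splits a space-separated extension string into individual names. */
    static Set<String> parse(String extensions) {
        Set<String> names = new HashSet<>();
        if (extensions == null) {
            // Assumed to mean that no extension list was available.
            return names;
        }
        for (String name : extensions.trim().split("\\s+")) {
            if (!name.isEmpty()) {
                names.add(name);
            }
        }
        return names;
    }

    /** Returns true only if the exact extension name is present. */
    static boolean supports(String extensions, String name) {
        return parse(extensions).contains(name);
    }

    public static void main(String[] args) {
        // Made-up sample; a real device returns its own list.
        String sample = "GL_OES_compressed_ETC1_RGB8_texture "
                + "GL_IMG_texture_compression_pvrtc";
        System.out.println(supports(sample, "GL_IMG_texture_compression_pvrtc"));
        System.out.println(supports(sample, "GL_EXT_texture_compression_s3tc"));
    }
}
```

Matching whole names rather than using a substring search avoids false positives when one extension name is a prefix of another.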

OpenGL ES API version 1.0 (and the 1.1 extensions) and version 2.0 both provide high performance graphics interfaces for creating 3D games, visualizations and user interfaces. Graphics programming for the OpenGL ES 1.0/1.1 API differs significantly from programming for ES 2.0, so developers should carefully consider factors such as performance, device compatibility, coding convenience and graphics control before starting development with either API.

While performance, compatibility, convenience, control and other factors may influence your decision, you should pick an OpenGL API version based on what you think provides the best experience for your users.